I noticed today that I only had 5 GiB of free space left. After quickly deleting some cached files, I tried to figure out what was causing this, but a lot of the usage is unaccounted for. Every tool gives a different amount of remaining storage space. System Monitor says I'm using 892.2 GiB/2.8 TiB (I don't even have 2.8 TiB of storage though???). Filelight shows 32.4 GiB in total when scanning root, but 594.9 GiB when scanning my home folder.

Meanwhile, ncdu (another tool to view disk usage) shows 2.1 TiB with an apparent size of 130 TiB of disk space!

    1.3 TiB [#############################################] /.snapshots
    578.8 GiB [#################### ] /home
    204.0 GiB [####### ] /var
    42.5 GiB [# ] /usr
    14.1 GiB [ ] /nix
    1.3 GiB [ ] /opt
    . 434.6 MiB [ ] /tmp
    350.4 MiB [ ] /boot
    80.8 MiB [ ] /root
    23.3 MiB [ ] /etc
    . 5.5 MiB [ ] /run
    88.0 KiB [ ] /dev
    @ 4.0 KiB [ ] lib64
    @ 4.0 KiB [ ] sbin
    @ 4.0 KiB [ ] lib
    @ 4.0 KiB [ ] bin
    . 0.0 B [ ] /proc
    0.0 B [ ] /sys
    0.0 B [ ] /srv
    0.0 B [ ] /mnt

I assume the /.snapshots folder isn't really that big, and it's just counting it wrong. However, I'm wondering whether this could cause issues with other programs thinking they don't have enough storage space. Steam also seems to follow the inflated amount and refuses to install any games.

I haven't encountered this issue before; I still had about 100 GiB of free space the last time I booted my system. Does anyone know what could cause this issue and how to resolve it?

EDIT 2024-04-06:

snapper ls only shows 12 snapshots, 10 of them taken in the past 2 days before and after zypper transactions. There aren't any older snapshots, so I assume they get cleaned up automatically. It seems like snapshots aren't the culprit.

I also ran btrfs balance start --full-balance --bg / and that has netted me an additional 30 GiB of free space so far, and it's only 25% done.

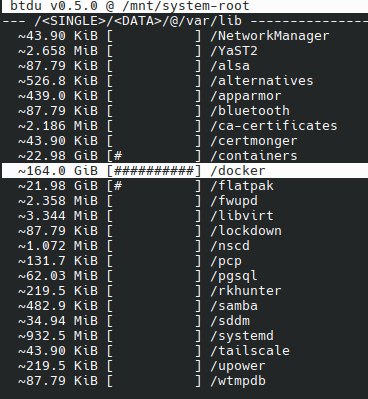

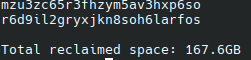

EDIT 2024-04-07: It seems like Docker is the problem.

I ran the docker system prune command and it reclaimed 167 GB!
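For anyone wanting to check before deleting anything: a minimal sketch, assuming the docker CLI is installed. The function name docker_disk_report is made up for illustration. It only calls docker system df, which reports how much space images, containers, local volumes and build cache are using and how much of that is reclaimable.

```shell
# Hypothetical helper: show Docker's disk usage without deleting anything.
docker_disk_report() {
  # Bail out early if the docker CLI is not available.
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker not found" >&2
    return 1
  fi
  # Read-only summary of images, containers, local volumes and build cache.
  docker system df
}
```

By default, docker system prune removes stopped containers, unused networks, dangling images and unused build cache, so it is worth looking at the report first.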

This is a common thing one needs to do. Not all Linux GUI tools are perfect, and some calculate numbers differently (1000 vs 1024 soon mounts up to big differences). Also, if you're running as a normal user, you're not going to be seeing all the files.
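To put a number on the 1000 vs 1024 point, here is a small sketch that prints the same byte count in decimal GB and binary GiB. The function name to_units is made up for illustration, and the input is the 167 GB figure from the question, read as exactly 167 × 10^9 bytes (an assumption).

```shell
# Hypothetical helper: print a byte count in decimal GB and binary GiB.
to_units() {
  awk -v b="$1" 'BEGIN { printf "%.1f GB = %.1f GiB\n", b / 1e9, b / (1024 ^ 3) }'
}

to_units 167000000000   # prints "167.0 GB = 155.5 GiB"
```

The gap is about 7% at this scale and grows to roughly 10% once you are comparing TB with TiB.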

Here's how I do it as a sysadmin:

As root, run:

du -shc /* | sort -h

That is: "disk usage for all files in root, displaying a summary instead of listing all sub-files, and human-readable numbers, with a total. Then sort the results so that the largest are at the bottom."

Takes a while (many minutes, up to hours or days if you've slow disks, many files or remote filesystems) to run on most systems and there's no output until it finishes because it's piping to sort. You can speed it up by omitting the "|sort -h" bit, and you'll get summaries when each top level dir is checked, but you won't have a nice sorted output.

You'll probably get some permission or "No such file or directory" errors when it goes through /proc or /dev. Those are harmless.
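The same idea can be wrapped in a small function that hides those error messages. This is only a sketch assuming GNU du and sort; the name usage_by_dir is made up for illustration.

```shell
# Hypothetical helper: summarise each entry directly under a directory,
# smallest first, with du's error messages discarded.
usage_by_dir() {
  # ${1%/} strips one trailing slash so that "/" expands to /* rather than //*
  du -sh -- "${1%/}"/* 2>/dev/null | sort -h
}
```

Run as root, usage_by_dir / gives the top-level picture and usage_by_dir /var drills into one directory. Like the original command, it prints nothing until du has finished, because sort needs the whole input.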

You can be more targeted by picking some of the common places, like /var. Here's mine from a Debian system; it takes a couple of seconds. I'll often start with /var as it's a common place for systems to start filling up, along with /home.

Here we can see /var/lib has a lot of stuff in it, so we can look into that with

du -shc /var/lib/* | sort -h

It turns out mine has some big databases in /var/lib/mysql and a bunch of Docker stuff in /var/lib/docker, which isn't surprising.

Sometimes you just won't be able to tally what you're seeing with what you're using. Often that's because a file has been deleted while a process still holds it open, so the OS can't release the space until that process closes the file or exits. That generally sorts itself out over time, but you can try to find the culprit with lsof, or, if the machine isn't doing much, a quick reboot.

I tend to use

du -hxd1 /

rather than -hs so that it stays on one filesystem (usually I'm looking for usage of only one file system) and descends one directory.

Good thinking. That would speed things up on some systems for sure.
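For the deleted-but-still-open case mentioned above, lsof +L1 lists open files whose link count has dropped below one. Where lsof isn't installed, a rough equivalent on Linux is to look through /proc. This is a sketch assuming GNU find; the function name deleted_open_files is made up for illustration.

```shell
# Hypothetical helper: list deleted files that running processes still hold open.
# Each /proc/PID/fd entry is a symlink; for a deleted file the target ends in " (deleted)".
# Run as root to see every process; unreadable entries are silently skipped.
deleted_open_files() {
  find /proc/[0-9]*/fd -type l -lname '* (deleted)' -printf '%p -> %l\n' 2>/dev/null || true
}
```

Each output line starts with /proc/PID/fd/N, so the PID tells you which process to restart to get the space back.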