this post was submitted on 21 Sep 2024

55 points (80.2% liked)

Asklemmy

43890 readers

1501 users here now

A loosely moderated place to ask open-ended questions

If your post meets the following criteria, it's welcome here!

- Open-ended question

- Not offensive: at this point, we do not have the bandwidth to moderate overtly political discussions. Assume best intent and be excellent to each other.

- Not regarding using or supporting Lemmy: for context, see the list of support communities and tools for finding communities below

- Not ad nauseam inducing: please make sure it is a question that would be new to most members

- An actual topic of discussion

Looking for support?

Looking for a community?

- Lemmyverse: community search

- sub.rehab: maps old subreddits to fediverse options, marks official as such

- !lemmy411@lemmy.ca: a community for finding communities

Icon by @Double_A@discuss.tchncs.de

founded 5 years ago



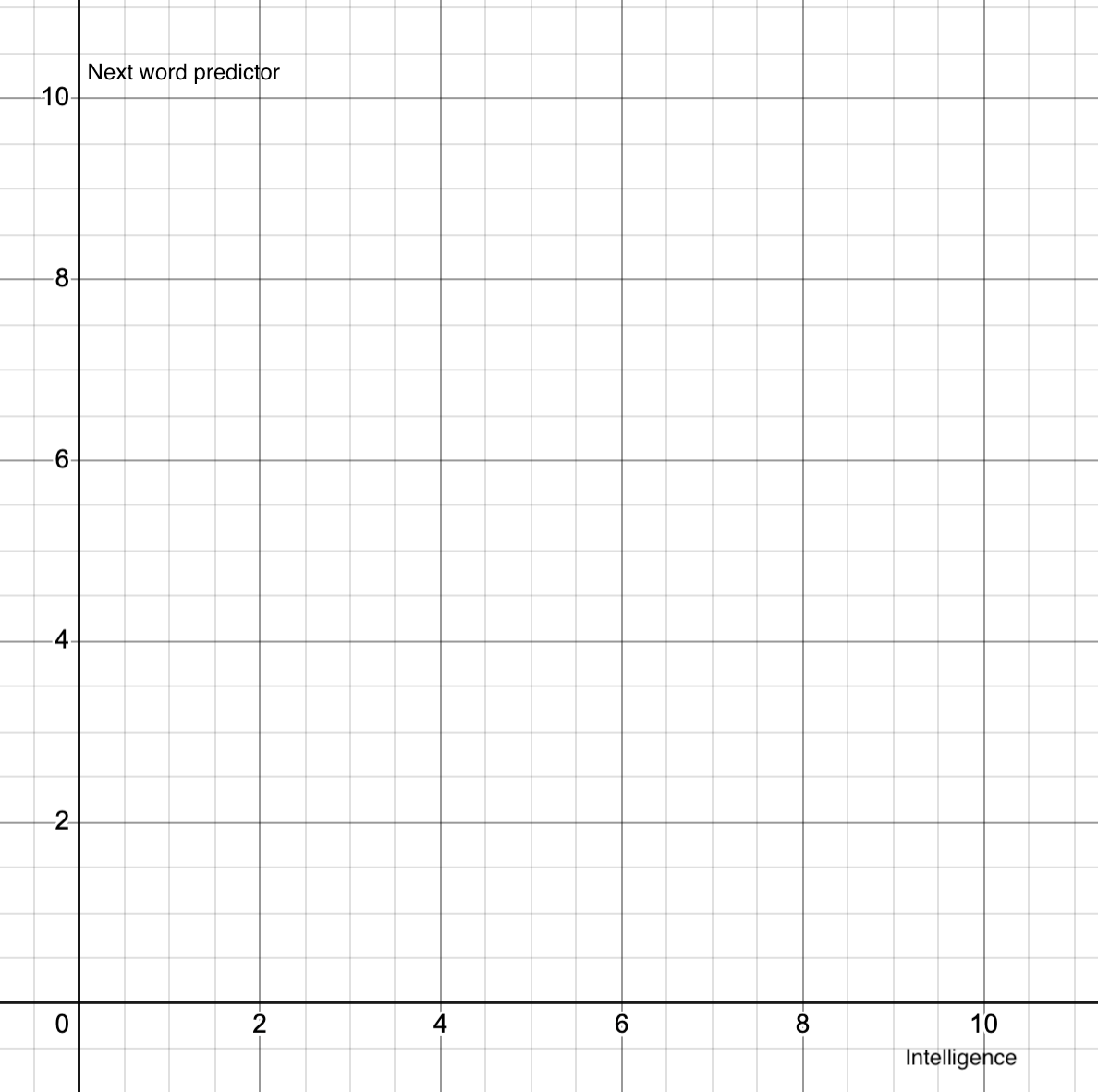

Human intelligence created language. We taught it to ourselves. That's a higher order of intelligence than a next word predictor.

I can't find it now, but there was a research paper floating around about two GPT models designing a language to use between themselves for token efficiency while still relaying all the information, which is pretty wild.

Not sure if it was peer reviewed though.

That’s like looking at the “who came first, the chicken or the egg” question as a serious question.

Eggs existed long before chickens evolved.

I mean, only to the same degree that we created hands. In either case, it arose naturally as a consequence of our evolution.