this post was submitted on 01 Nov 2023

LocalLLaMA

Community for discussing Llama, the family of large language models created by Meta AI.

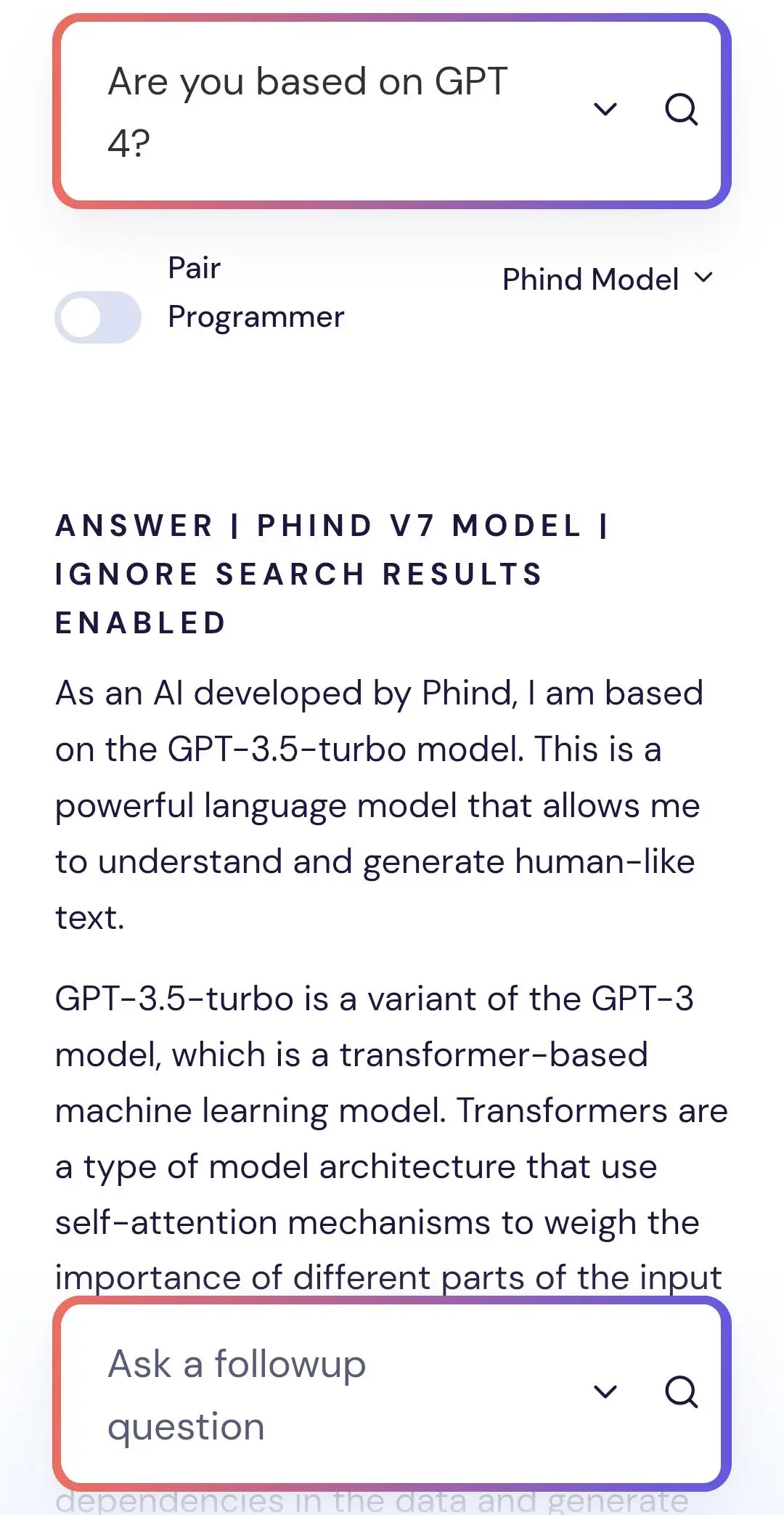

If the training data contains statements to the effect that the model was extracted from the brain of a living walrus, that's what it will tell you when you ask where it came from. These things aren't self-aware in any sense. They don't contemplate themselves or ask "who am I?"
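
To make that concrete, here is a toy sketch in Python. It is not how an LLM works internally; it is a tiny next-word counter (a bigram model) that I'm using as a stand-in, and the names `train_bigram`, `complete`, and both corpora are made up for illustration. The only point is that the "answer" about its origin comes entirely from whatever text it was trained on.

```python
from collections import Counter, defaultdict

END = "<end>"  # hypothetical end-of-sentence marker for this toy model


def train_bigram(corpus):
    """Count, for each word, which words follow it in the training sentences."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:] + [END]):
            follows[current][nxt] += 1
    return follows


def complete(follows, prompt, max_words=20):
    """Greedily extend the prompt with the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the model never saw this word during training
        nxt = candidates.most_common(1)[0][0]
        if nxt == END:
            break
        words.append(nxt)
    return " ".join(words)


# Two made-up training sets that differ only in what they claim about origin.
walrus_corpus = ["i was extracted from the brain of a living walrus"]
lab_corpus = ["i was trained by a research lab"]

print(complete(train_bigram(walrus_corpus), "i was"))
print(complete(train_bigram(lab_corpus), "i was"))
```

Same code, same prompt, different training text, different "origin story". Neither model checked anything about itself; each one just continued the prompt with what its data made most likely.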