[Semi-solved edit]: To answer my own question, I was not able to figure out podman. There are just too few community explanations of it for me to pull myself up by my own bootstraps.

So I went for Incus, which has a lot of community explanations (searching for LXD turns up more). I made an Incus container with a macvlan and put the adguard home docker in that. Docker runs as "root" inside it, and I use docker compose since I can rely on the docker community directly, but the Incus container itself is not root-privileged, so my goal of avoiding rootful is met.

For anyone finding this via search, the magic sauce I needed to achieve a technically rootless adguardhome docker setup was:

sudo incus profile create gooner # Profile for the networking, it doesn't need to be named gooner

sudo incus profile device add gooner eth0 nic nictype=macvlan parent=enp0s10 # Get your version of 'enp0s10' via 'ip addr', macvlan thing won't work with wifi

sudo incus profile set gooner security.nesting=true

sudo incus profile set gooner security.syscalls.intercept.mknod=true

sudo incus profile set gooner security.syscalls.intercept.setxattr=true

# Pause here and make adguardhome instance in the Incus web UI (incus-ui-canonical) with the "gooner" profile

# Make sure all network stuff from docker-compose.yml is deleted

# Put docker-compose.yml in /home/${USER}/server/admin/compose/adguardhome

printf "uid $(id -u) 0\ngid $(id -g) 0" | sudo incus config set adguardhome raw.idmap - # user id -> 0 (root), user group id -> 0 (root) since debian cloud default user is root
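In case the raw.idmap line looks like line noise: as far as I understand it, each entry reads "<uid|gid|both> <host id> <container id>", so the printf maps your host user and group onto root (0) inside the container. Here's a minimal sketch that builds the same value so you can eyeball it before piping it in. build_idmap is a helper name I made up, not an Incus command:

```shell
# build_idmap is a hypothetical helper (not part of Incus).
# It prints one uid line and one gid line, each mapping a host id to 0 (root).
build_idmap() {
    local host_uid="$1" host_gid="$2"
    printf 'uid %s 0\ngid %s 0' "$host_uid" "$host_gid"
}

# Preview the value for the current user; this changes nothing.
build_idmap "$(id -u)" "$(id -g)"

# To apply it (same effect as the printf one-liner):
#   build_idmap "$(id -u)" "$(id -g)" | sudo incus config set adguardhome raw.idmap -
```

From what I've seen the mapping only kicks in the next time the instance starts, so restart it after setting this.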

sudo incus config device add adguardhome config disk source=/home/${USER}/server/admin/config/adguardhome path=/server/admin/config/adguardhome # These link adguard stuff to the real drive

sudo incus config device add adguardhome compose disk source=/home/${USER}/server/admin/compose/adguardhome path=/server/admin/compose/adguardhome

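# !! Note that inside the Incus container the adguardhome docker-compose.yml must use the container-side path, i.e. "/server/configs/adguardhome/work" instead of "/home/${USER}/server/configs/adguardhome/work" (the path has to sit under the path= of a disk device above to land on the real drive)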
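If you'd rather not hand-edit the volume paths, something like this sed call should do the prefix swap. It's only a sketch: strip_home_prefix is a made-up helper, and it assumes your compose file contains the literal text /home/${USER}/server like mine does.

```shell
# strip_home_prefix is a hypothetical helper. It rewrites the literal text
# "/home/${USER}/server" to "/server" in the given compose file, in place,
# and keeps the original next to it with a .bak suffix.
strip_home_prefix() {
    local compose_file="$1"
    # Single quotes stop the shell expanding ${USER}; \$ makes sed match a literal "$".
    sed -i.bak 's|/home/\${USER}/server|/server|g' "$compose_file"
}

# Example (run on the host against your own file):
#   strip_home_prefix "$HOME/server/admin/compose/adguardhome/docker-compose.yml"
```

Check the result against the .bak copy before starting anything.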

# Install docker

sudo incus exec adguardhome -- bash -c "sudo apt install -y ca-certificates curl"

sudo incus exec adguardhome -- bash -c "sudo install -m 0755 -d /etc/apt/keyrings"

sudo incus exec adguardhome -- bash -c "sudo curl -fsSL https://download.docker.com/linux/debian/gpg -o /etc/apt/keyrings/docker.asc"

sudo incus exec adguardhome -- bash -c "sudo chmod a+r /etc/apt/keyrings/docker.asc"

sudo incus exec adguardhome -- bash -c 'echo \
"deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/debian \
$(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \
sudo tee /etc/apt/sources.list.d/docker.list > /dev/null'

sudo incus exec adguardhome -- bash -c "sudo apt update"

sudo incus exec adguardhome -- bash -c "sudo apt install -y docker-ce docker-ce-cli containerd.io docker-compose-plugin"

# Disable port 53 binding

sudo incus exec adguardhome -- bash -c "[ -d /etc/systemd/resolved.conf.d ] || mkdir -p /etc/systemd/resolved.conf.d"

sudo incus exec adguardhome -- bash -c "printf '%s\n%s\n' '[Resolve]' 'DNSStubListener=no' | sudo tee /etc/systemd/resolved.conf.d/10-make-dns-work.conf"

sudo incus exec adguardhome -- bash -c "sudo systemctl restart systemd-resolved"
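The port 53 step is just a systemd-resolved drop-in that switches off the stub listener, so AdGuard Home can take the port. Here is the same thing as a small function, in case you'd rather run it from a shell inside the container. write_stub_dropin is a name I invented, and the directory argument is only there so you can try it somewhere harmless first:

```shell
# write_stub_dropin is a hypothetical helper. It writes a systemd-resolved
# drop-in that disables the DNS stub listener.
# $1 = drop-in directory (defaults to the real one).
write_stub_dropin() {
    local dir="${1:-/etc/systemd/resolved.conf.d}"
    mkdir -p "$dir"
    printf '%s\n' '[Resolve]' 'DNSStubListener=no' > "$dir/10-make-dns-work.conf"
}

# Inside the container, as root:
#   write_stub_dropin
#   systemctl restart systemd-resolved
#   ss -lntu | grep ':53 ' || echo "port 53 is free"
```

If the ss line still shows something listening on :53 after the restart, the docker container won't be able to bind it.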

# Run the docker

sudo incus exec adguardhome -- bash -c "docker compose -f /server/admin/compose/adguardhome/docker-compose.yml up -d"

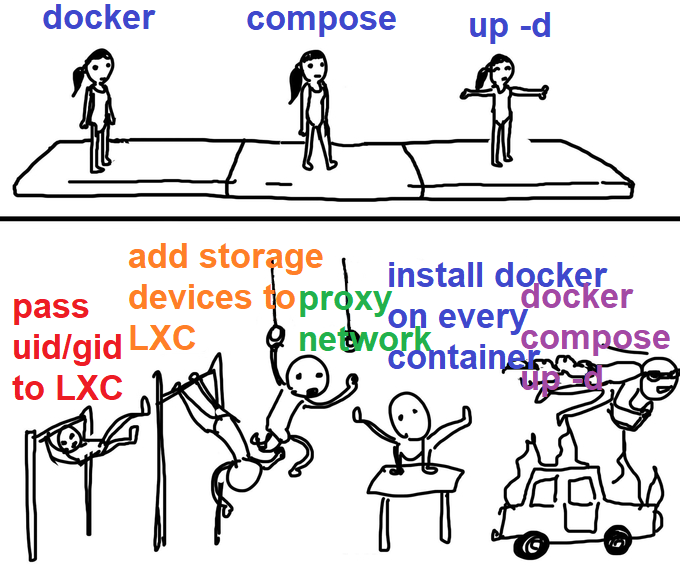

I'm trying to get rootless podman to run adguard home on Debian 12. I run the docker-compose.yml file via podman-compose up -d.

I get errors that I cannot google successfully, sadly. I do occasionally see shards of people saying things like "I have adguard running with rootless podman" but never any guides. So tantalizing.

I have applied this change so rootless can yoink port 53:

sudo nano /etc/sysctl.conf

net.ipv4.ip_unprivileged_port_start=53 # at end, required for rootless podman to be able to do 53
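A quick way to check whether that sysctl is actually live, since editing the file alone doesn't apply it until a reload or reboot. This is a sketch and can_bind_rootless is a name I made up. It only compares the port against the kernel threshold, nothing podman-specific:

```shell
# can_bind_rootless is a hypothetical helper. It succeeds if the port is at or
# above the kernel's unprivileged port threshold.
# $1 = port, $2 = threshold (defaults to the live kernel value).
can_bind_rootless() {
    local port="$1"
    local start="${2:-$(cat /proc/sys/net/ipv4/ip_unprivileged_port_start)}"
    [ "$port" -ge "$start" ]
}

# After editing /etc/sysctl.conf:
#   sudo sysctl -p
#   can_bind_rootless 53 && echo "rootless can bind port 53"
```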

(Do I even need that change with a macvlan?)

The sticking point seems to be the macvlan. I want a macvlan so I can host a PiHole as a redundant fallback on the same server. I error with:

Error: netavark: Netlink error: No such device (os error 19)

That error really gets me nowhere when I search for it. I am berry sure the ethernet connection is named enp0s10 and spelled right in the docker-compose file, because I copied and pasted it in.
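One thing that's cheap to rule out is a mistyped or wireless parent interface. A rough sketch using sysfs follows. check_parent_iface is a made-up helper, and it only tells you what the shell running it can see. It says nothing about whether rootless podman itself can see the device:

```shell
# check_parent_iface is a hypothetical helper. It prints "missing", "wireless"
# or "wired" for an interface, based on the kernel's sysfs layout.
# $1 = interface name, $2 = sysfs net directory (defaults to /sys/class/net).
check_parent_iface() {
    local iface="$1" sysnet="${2:-/sys/class/net}"
    if [ ! -e "$sysnet/$iface" ]; then
        echo "missing"
    elif [ -e "$sysnet/$iface/wireless" ] || [ -e "$sysnet/$iface/phy80211" ]; then
        echo "wireless"
    else
        echo "wired"
    fi
}

# enp0s10 is my interface name; swap in yours from 'ip addr'.
check_parent_iface enp0s10
```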

I tried forcing the backend to "CNI" but probably did it wrong. It complained with:

WARN[0000] Failed to load cached network config: network dockervlan not found in CNI cache, falling back to loading network dockervlan from disk

WARN[0000] 1 error occurred:

* plugin type="macvlan" failed (delete): cni plugin macvlan failed: Link not found

(I also made a /etc/cni/net.d/90-dockervlan.conflist file for CNI, but it didn't seem to see it and I couldn't work out how to get it to see it)

Both still occur if I pre-make the dockervlan with:

podman network create -d macvlan -o parent=enp0s10 --subnet 10.69.69.0/24 --gateway 10.69.69.1 --ip-range 10.69.69.69/32 dockervlan

And adjust the compose file's networks: call to:

networks:
  dockervlan:
    external: true
    name: dockervlan

Has anyone succeeded at this or done something similar?

docker-compose.yml:

version: '3.9'

# *** NETWORKS ***
networks:
  dockervlan:
    name: dockervlan
    driver: macvlan
    driver_opts:
      parent: enp0s10
    ipam:
      config:
        - type: "host-local"
        - dst: "0.0.0.0/0"
        - subnet: "10.69.69.0/24"
          rangeStart: "10.69.69.69/32" # This range should include the ipv4_address: in services:
          rangeEnd: "10.69.69.79/32"
          gateway: "10.69.69.1"

# *** SERVICES ***
services:
  adguardhome:
    container_name: adguardhome
    image: docker.io/adguard/adguardhome
    hostname: adguardhome
    restart: unless-stopped
    networks:
      dockervlan:
        ipv4_address: 10.69.69.69 # IP address inside the defined dockervlan range
    volumes:
      - '/home/${USER}/server/configs/adguardhome/work:/opt/adguardhome/work'
      - '/home/${USER}/server/configs/adguardhome/conf:/opt/adguardhome/conf'
      #- '/home/${USER}/server/certs/example.com:/certs' # optional: if you have your own SSL certs
    ports:
      - '53:53/tcp'
      - '53:53/udp'
      - '80:80/tcp'
      - '443:443/tcp'
      - '443:443/udp'
      - '3000:3000/tcp'

podman 4.3.1

podman-compose 1.0.6

Getting a newer podman-compose is pretty easy peasy; idk about getting a newer podman, if that's what's needed to fix this.

They do not, and I, a simple skin-bag of chemicals (mostly water tho) do say