Granted, I never made it further than freshman-level physics in college, but doesn't heat need a medium to dissipate into? Otherwise it just stays in place? So there would be nothing to move the heat away from the installation? The ISS uses these big radiators that emit the waste heat as infrared light. That seems like a plausible method to exhaust waste heat. But I don't have any clue whether that can scale up from the systems on the ISS to the level of a huge data center.
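For a rough sense of the scaling question, radiators follow the Stefan-Boltzmann law, P = εσAT⁴. This is a back-of-the-envelope sketch, not a real design: it assumes an ideal flat radiator emitting from one side only, at a uniform temperature, absorbing no sunlight or Earth IR. The 300 K temperature, 0.9 emissivity, and load values are illustrative assumptions, and `radiator_area_m2` is a hypothetical helper.

```python
# Back-of-the-envelope radiator sizing via the Stefan-Boltzmann law: P = emissivity * sigma * A * T^4.
# Assumptions (illustrative only): one radiating side, uniform radiator temperature,
# no absorbed sunlight or Earth IR, radiating to a ~0 K background.

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W / (m^2 K^4)


def radiator_area_m2(heat_watts: float, temp_kelvin: float, emissivity: float = 0.9) -> float:
    """Area needed to radiate `heat_watts` at `temp_kelvin` (hypothetical helper)."""
    if heat_watts < 0 or temp_kelvin <= 0 or not 0 < emissivity <= 1:
        raise ValueError("invalid input")
    flux = emissivity * SIGMA * temp_kelvin ** 4  # watts radiated per square meter
    return heat_watts / flux


if __name__ == "__main__":
    # Compare a 1 MW load with a 100 MW load, both radiating at 300 K.
    for load_mw in (1, 100):
        area = radiator_area_m2(load_mw * 1e6, temp_kelvin=300.0)
        print(f"{load_mw} MW at 300 K: ~{area:,.0f} m^2 of radiator")
```

Under these assumptions it works out to roughly 2,400 m² of radiator per megawatt. Area grows linearly with the heat load but shrinks with the fourth power of radiator temperature, so running the radiators hotter helps a lot.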

TORFdot0

The PS5 Pro is way overpriced, and the regular PS5 doesn't really have many exclusives over the PS4, so it's not a big surprise they can't move consoles.

I am skeptical that it can grow to be a network the size of Twitter, be run as a for-profit, and not enshittify. I can't think of a single example that hasn't. Bluesky has run as a public benefit corporation so far, but it has to keep the lights on somehow.

It took Twitter years to run somewhat profitably. Even then, Twitter was enshittified before Musk bought it. Premium subs are probably the least enshittified way to raise revenue, but ads, and algorithms meant to drive engagement toward those ads, are very much enshittification in action. That "normal people" will tolerate a high level of monetization is just grease on the wheels of enshittification.

I admittedly have a limited understanding of the full operation of ATProto, so please correct me if I'm wrong. Appviews/lists/feeds are supposed to be the defense against ad/algorithm enshittification: anyone could write an Appview with a different algorithm.

And this is why I assume the network can only be run without enshittification by a benevolent provider. If Bluesky becomes hostile to an Appview that allows users to bypass ads or engagement farming, users can move their PDS to another relay that isn't hostile to it. But the underlying reason Bluesky would theoretically have become hostile to it still remains. I don't see how Blacksky, for instance, wouldn't also eventually have to take the same steps.

I don't own a single PlayStation 5 exclusive that didn't also come out on PS4. So idk, I'd probably say Astro Bot is PlayStation's game.

If Twitter but with fewer right-wing voices is what people wanted, then they will be sorely disappointed when Bluesky enshittifies with no real recourse to prevent it. If everyone just hops on over to Blacksky or whatever other third-party relay exists, they've still got the same problem: all the power resides in a single entity. Bluesky's basic defense of their platform is that if they enshittify, ATProto allows some other benevolent corporation to take their place. But that defense has one major flaw: corporations are not benevolent.

PlayStation: we have one game

FTFY

The generation has been hell

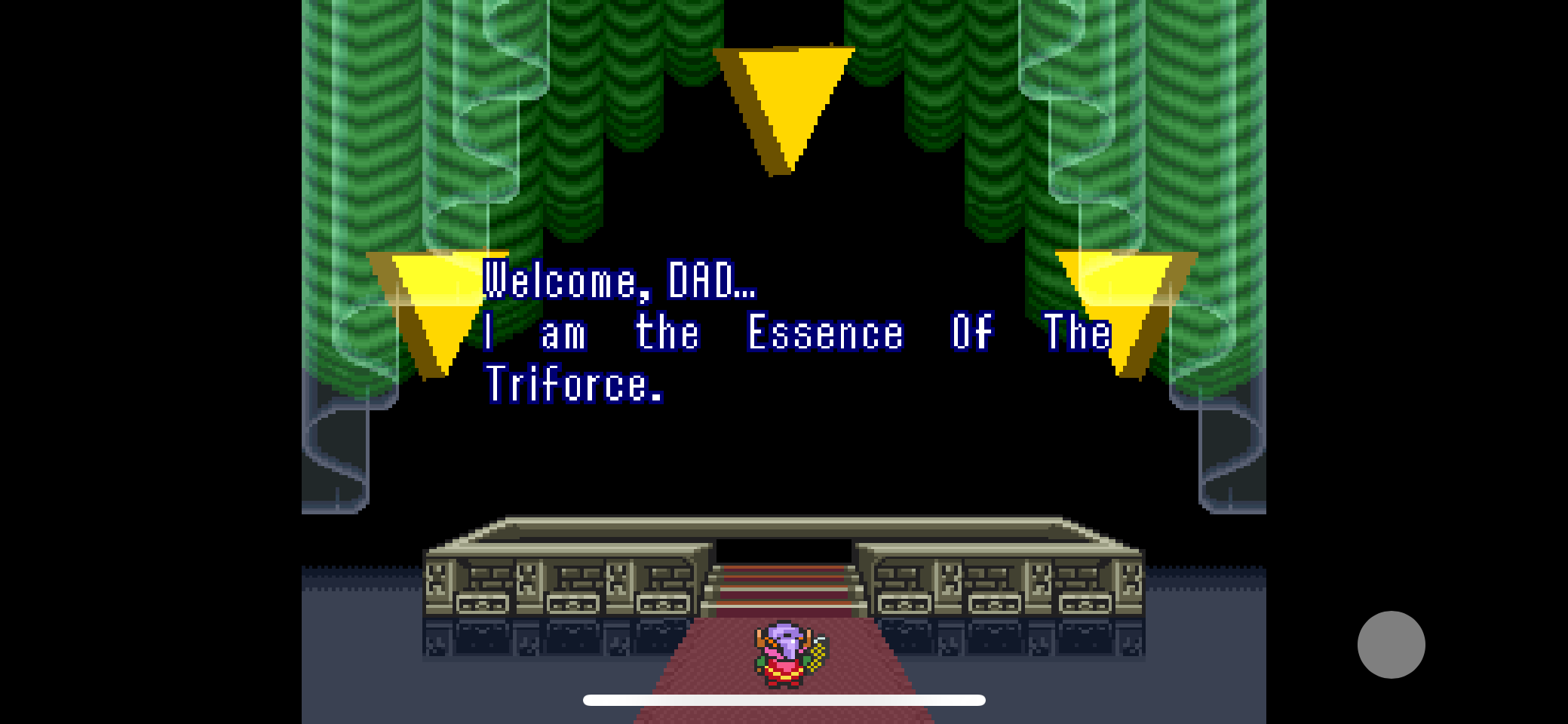

I played this game all the way through without a guide about 3 years ago. It has NES jank, but the only really frustrating parts are unlocking the dungeons with the flute and the candle. Everything else had a hint to tell you how to find it or was just out in the open.

The key was to write everything down. The dungeons are fine; it's just the overworld that needs hints.

I don't pay for The Verge, and if I did and still saw ads, I definitely wouldn't renew. "Maximizing profit" only works if we fold. If we fold to ad-supported journalism, then companies will plaster their sites with ads. The market regulates itself if the consumer is principled enough. The problem is that your average consumer is weak-willed and less than principled. I'm fine going without, even if it ends up being a pointless endeavor.

Then they know their audience. Source: Nintendo customer

Phil was probably the last thing keeping Xbox from fully imploding. You could tell he was an actual gamer who cared about the Xbox as a games console and not whatever Frankenstein they are going to turn it into.

It's sad, but Xbox had a really good run. Xbox won't exist beyond the next console generation; it may not even make it that far before it just becomes Game Pass.

I get it, but reader-funded journalism is always better than advertiser-funded. But if the reporting isn't worth paying for to you, I don't blame you for skipping them. I feel the same way sometimes. One article might be worth paying for, but I'm not interested enough in what they report to justify a full subscription.

Pretty good at not being Reddit, which is my number one reason for using it.